Common HDFS Commands

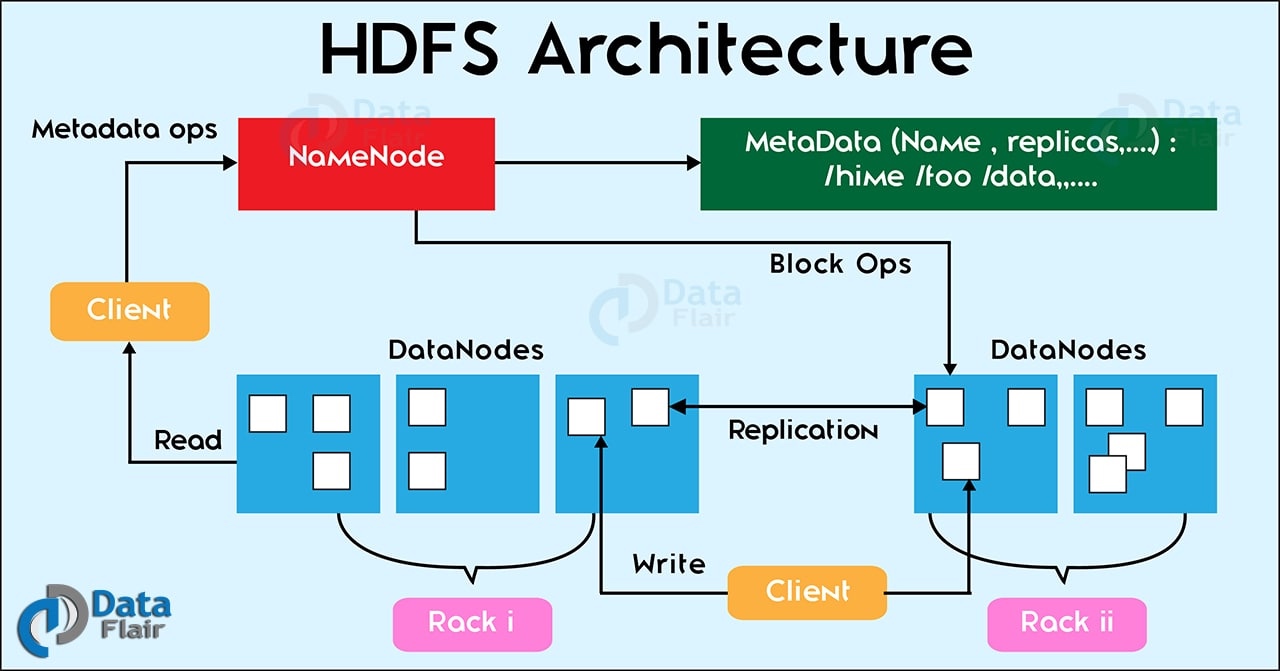

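HDFS is a distributed file system that provides file storage for the Hadoop ecosystem.

With HDFS in place, Hive can focus on the metadata layer when building a data warehouse and leave physical storage to HDFS; the same applies to HBase and Druid. Spark on YARN also needs HDFS to store its runtime environment, logs, and other files, and MapReduce depends on it even more.

The sections below cover the most common HDFS commands.

Common commands: hdfs dfs

File system operations mainly go through hdfs dfs. The general argument structure is: hdfs dfs + - + a Linux-like command

For example, to list the files in the root directory: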

# hdfs dfs -ls hdfs://hadoop-10.com:8020/

Found 5 items

drwxr-xr-x - hdfs supergroup 0 2021-05-22 17:58 hdfs://hadoop-10.com:8020/data

drwxr-xr-x - hdfs supergroup 0 2021-05-17 16:48 hdfs://hadoop-10.com:8020/kylin

drwxrwxrwt - hdfs supergroup 0 2021-06-03 19:44 hdfs://hadoop-10.com:8020/tmp

drwxr-xr-x - hdfs supergroup 0 2021-05-18 13:34 hdfs://hadoop-10.com:8020/user

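If you get a permission error, you can set the executing user through an environment variable (export HADOOP_USER_NAME=hdfs).

Another option is:

sudo -u hdfs hdfs dfs -ls /

If the environment variable for the Hadoop configuration directory is set (export HADOOP_CONF_DIR=/etc/hadoop/conf), you do not need to specify the HDFS URL.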

# hdfs dfs -ls /

Found 5 items

drwxr-xr-x - hdfs supergroup 0 2021-05-22 17:58 /data

drwxr-xr-x - hdfs supergroup 0 2021-05-17 16:48 /kylin

drwxrwxrwt - hdfs supergroup 0 2021-06-03 19:44 /tmp

drwxr-xr-x - hdfs supergroup 0 2021-05-18 13:34 /user

- Check capacity

# hdfs dfs -df -h

Filesystem Size Used Available Use%

hdfs://hadoop-10.com:8020 1.7 T 95.5 G 1.3 T 5%

- Upload a file to HDFS

# hdfs dfs -put README.md /tmp/xxx/

# hdfs dfs -ls /tmp/xxx/

Found 1 items

-rw-r--r-- 3 hdfs supergroup 2245 2020-05-17 18:43 /tmp/xxx/README.md

- Create a directory

# hdfs dfs -mkdir /data/software

# hdfs dfs -ls /data/

Found 2 items

drwxr-xr-x - hdfs supergroup 0 2020-05-17 08:16 /data/cloudrea_manager

drwxr-xr-x - hdfs supergroup 0 2021-05-01 09:36 /data/software

- Download a file

# hdfs dfs -ls -h /data/sampledata

Found 2 items

-rw-r--r-- 2 hdfs supergroup 8.7 M 2021-05-22 22:37 /data/sampledata/GeoLite2-City-Locations-zh-CN.csv

# hdfs dfs -get /data/sampledata/GeoLite2-City-Locations-zh-CN.csv /tmp/

# ls -lh /tmp/GeoLite2-City-Locations-zh-CN.csv

-rw-r--r-- 1 root root 8.7M 6月 27 19:19 /tmp/GeoLite2-City-Locations-zh-CN.csv

- Delete a file (add -r to delete a directory)

# hdfs dfs -rm /data/software/jdk-8u202-linux-x64.rpm

21/05/01 09:39:31 INFO fs.TrashPolicyDefault: Moved: 'hdfs://hadoop-10.com:8020/data/software/jdk-8u202-linux-x64.rpm' to trash at: hdfs://hadoop-10.com:8020/user/hdfs/.Trash/Current/data/software/jdk-8u202-linux-x64.rpm
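The log line above suggests that a deleted path is moved under the deleting user's .Trash/Current directory with its original path appended. The sketch below only builds that trash path as a string so the file can be located later. The trash_path function name is hypothetical, and the /user/<user>/.Trash/Current layout is inferred from this log rather than read from the cluster configuration.

```shell
# Hypothetical helper: derive where a deleted HDFS path ends up in the trash.
# Assumes the layout seen in the log above: /user/<user>/.Trash/Current/<original path>.
trash_path() {
  user="$1"
  path="$2"
  printf '/user/%s/.Trash/Current%s\n' "$user" "$path"
}

restored_from=$(trash_path hdfs /data/software/jdk-8u202-linux-x64.rpm)
echo "$restored_from"
# The file could then be moved back with something like:
#   hdfs dfs -mv "$restored_from" /data/software/
```

The helper does not contact HDFS; it only prints the path, so it is safe to run on any machine.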

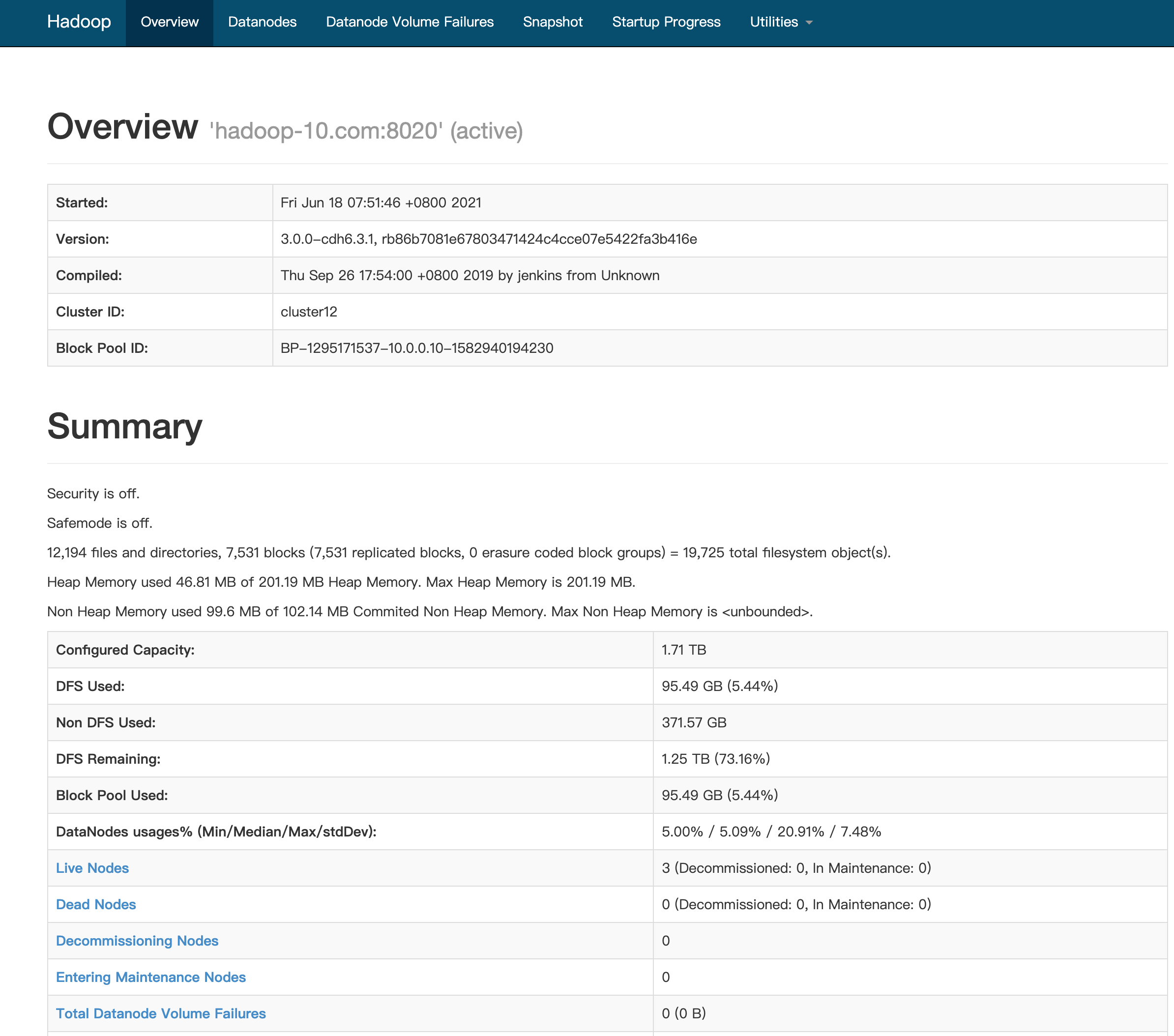

Administration: hdfs dfsadmin

View cluster-level capacity information

# hdfs dfsadmin -report

Configured Capacity: 1883712503399 (1.71 TB)

Present Capacity: 1480559898697 (1.35 TB)

DFS Remaining: 1378036129865 (1.25 TB)

DFS Used: 102523768832 (95.48 GB)

DFS Used%: 6.92%

Replicated Blocks:

Under replicated blocks: 0

Blocks with corrupt replicas: 0

Missing blocks: 0

Missing blocks (with replication factor 1): 0

Low redundancy blocks with highest priority to recover: 0

Pending deletion blocks: 0

Erasure Coded Block Groups:

Low redundancy block groups: 0

Block groups with corrupt internal blocks: 0

Missing block groups: 0

Low redundancy blocks with highest priority to recover: 0

Pending deletion blocks: 0

-------------------------------------------------

Live datanodes (3):

Name: 10.0.0.29:9866 (hadoop-29.com)

Hostname: hadoop-29.com

Rack: /default

Decommission Status : Normal

Configured Capacity: 918136558797 (855.08 GB)

DFS Used: 45897576448 (42.75 GB)

Non DFS Used: 199899391181 (186.17 GB)

DFS Remaining: 672210081868 (626.04 GB)

DFS Used%: 5.00%

DFS Remaining%: 73.21%

Configured Cache Capacity: 1787822080 (1.67 GB)

Cache Used: 0 (0 B)

Cache Remaining: 1787822080 (1.67 GB)

Cache Used%: 0.00%

Cache Remaining%: 100.00%

Xceivers: 4

Last contact: Sun Jun 27 19:45:01 CST 2021

Last Block Report: Sun Jun 27 17:17:24 CST 2021

Name: 10.0.0.30:9866 (hadoop-30.com)

Hostname: hadoop-30.com

Rack: /default

Decommission Status : Normal

Configured Capacity: 47439385805 (44.18 GB)

DFS Used: 9919037440 (9.24 GB)

Non DFS Used: 0 (0 B)

DFS Remaining: 34152836709 (31.81 GB)

DFS Used%: 20.91%

DFS Remaining%: 71.99%

Configured Cache Capacity: 1787822080 (1.67 GB)

Cache Used: 0 (0 B)

Cache Remaining: 1787822080 (1.67 GB)

Cache Used%: 0.00%

Cache Remaining%: 100.00%

Xceivers: 12

Last contact: Sun Jun 27 19:45:01 CST 2021

Last Block Report: Sun Jun 27 19:28:58 CST 2021

Name: 10.0.0.46:9866 (hadoop-46.com)

Hostname: hadoop-46.com

Rack: /default

Decommission Status : Normal

Configured Capacity: 918136558797 (855.08 GB)

DFS Used: 46707154944 (43.50 GB)

Non DFS Used: 199089812685 (185.42 GB)

DFS Remaining: 671673211288 (625.54 GB)

DFS Used%: 5.09%

DFS Remaining%: 73.16%

Configured Cache Capacity: 1787822080 (1.67 GB)

Cache Used: 0 (0 B)

Cache Remaining: 1787822080 (1.67 GB)

Cache Used%: 0.00%

Cache Remaining%: 100.00%

Xceivers: 12

Last contact: Sun Jun 27 19:45:01 CST 2021

Last Block Report: Sun Jun 27 19:13:30 CST 2021

This is much the same as what the web UI shows.
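The percentages in the report can be reproduced from the byte counts, which also helps explain why hdfs dfs -df shows 5% while the report shows 6.92%. This is a minimal sketch under two assumptions inferred from the numbers rather than from documentation: the cluster-level DFS Used% is DFS Used divided by Present Capacity, and the -df Use% column is relative to the full configured size.

```shell
# Byte counts copied from the cluster summary of the report above.
configured=1883712503399
present=1480559898697
dfs_used=102523768832

# Assumption: the report's DFS Used% is relative to Present Capacity.
used_vs_present=$(awk -v u="$dfs_used" -v c="$present" 'BEGIN { printf "%.2f", u / c * 100 }')
# Assumption: the -df Use% column is relative to the configured size.
used_vs_configured=$(awk -v u="$dfs_used" -v c="$configured" 'BEGIN { printf "%.2f", u / c * 100 }')

echo "DFS Used / Present Capacity:    ${used_vs_present}%"
echo "DFS Used / Configured Capacity: ${used_vs_configured}%"
```

The first figure matches the 6.92% in the report. The second comes out a little above 5%, which is consistent with the whole-number 5% in the -df output.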

Check the number of blocks in a directory

Check whether a directory or file is healthy

# hadoop fsck /user/hive/warehouse/test/ -files -blocks

Status: HEALTHY

Number of data-nodes: 2

Number of racks: 1

Total dirs: 24

Total symlinks: 0

Replicated Blocks:

Total size: 404271784 B (Total open files size: 873 B)

Total files: 13967 (Files currently being written: 1)

Total blocks (validated): 13967 (avg. block size 28944 B) (Total open file blocks (not validated): 1)

Minimally replicated blocks: 13967 (100.0 %)

Over-replicated blocks: 0 (0.0 %)

Under-replicated blocks: 13967 (100.0 %)

Mis-replicated blocks: 0 (0.0 %)

Default replication factor: 3

Average block replication: 2.0

Missing blocks: 0

Corrupt blocks: 0

Missing replicas: 13967 (33.333332 %)

Blocks queued for replication: 0

Erasure Coded Block Groups:

Total size: 0 B

Total files: 0

Total block groups (validated): 0

Minimally erasure-coded block groups: 0

Over-erasure-coded block groups: 0

Under-erasure-coded block groups: 0

Unsatisfactory placement block groups: 0

Average block group size: 0.0

Missing block groups: 0

Corrupt block groups: 0

Missing internal blocks: 0

Blocks queued for replication: 0

FSCK ended at Wed May 19 13:31:30 CST 2021 in 318 milliseconds

The filesystem under path '/user/hive/warehouse/test' is HEALTHY
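The replica figures in this output fit together arithmetically. With a default replication factor of 3 but only 2 data nodes, each of the 13967 blocks can hold at most 2 replicas, so one replica per block is missing and every block counts as under-replicated. The sketch below reproduces the reported values, assuming the percentage is missing replicas divided by expected replicas.

```shell
# Figures copied from the fsck output above.
blocks=13967
replication=3        # Default replication factor
missing=13967        # Missing replicas

# Expected replicas if every block were fully replicated.
expected=$((blocks * replication))
# Assumption: the percentage is missing replicas over expected replicas.
missing_pct=$(awk -v m="$missing" -v e="$expected" 'BEGIN { printf "%.2f", m / e * 100 }')
# Replicas that actually exist, averaged per block.
avg_replication=$(( (expected - missing) / blocks ))

echo "expected=$expected missing_pct=${missing_pct}% avg_replication=$avg_replication"
```

The result lines up with "Missing replicas: 13967 (33.333332 %)" and "Average block replication: 2.0" in the output.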

Setting the replication factor in HDFS

The global default is set in the hdfs-site.xml file.

<property>

<name>dfs.replication</name>

<value>2</value>

</property>

You can also set the replication factor for individual files or directories.

The -du output then shows that 3 replicas exist.

# hdfs dfs -setrep 3 /data/sampledata/GeoLite2-City-Locations-zh-CN.csv

Replication 3 set: /data/sampledata/GeoLite2-City-Locations-zh-CN.csv

# hdfs dfs -du /data/sampledata/GeoLite2-City-Locations-zh-CN.csv

9100366 27301098 /data/sampledata/GeoLite2-City-Locations-zh-CN.csv

# hdfs dfs -ls /data/sampledata/GeoLite2-City-Locations-zh-CN.csv

-rw-r--r-- 3 hdfs supergroup 9100366 2021-06-09 17:37 /data/sampledata/GeoLite2-City-Locations-zh-CN.csv
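The two numbers printed by -du are linked by the replication factor: the first is the file size and the second is the space consumed across all replicas. A quick check with the values above (the variable names are only illustrative):

```shell
# Values copied from the -du and -ls output above.
file_size=9100366
replicas=3

# Space consumed across all replicas should equal size x replication factor.
consumed=$((file_size * replicas))
echo "$consumed"
```

The result equals the second column of the -du output, 27301098.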
